
PIRL was then evaluated on a semi-supervised learning task. PLoS Comput Biol. We're saying that we should be able to recognize whether this picture is upright or whether it has been turned sideways. To fully leverage the merits of supervised clustering, we present RCA2, the first algorithm that combines reference projection with graph-based clustering. Supervised learning is a machine learning task in which an algorithm is trained to find patterns in a labeled dataset. 2018;36(5):411–20. scConsensus is implemented in R and is freely available on GitHub at https://github.com/prabhakarlab/scConsensus.
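
The rotation pretext task mentioned above (telling whether a picture is upright or turned sideways) can be sketched as a labeling step. This is a minimal NumPy illustration, not code from any of the works cited here. It assumes square, single-channel images stored as 2-D arrays, so every rotated copy has the same shape. `make_rotation_batch` is a hypothetical helper name.

```python
import numpy as np

def make_rotation_batch(images):
    """Build a self-supervised rotation batch (hypothetical helper).

    Each image is rotated by 0, 90, 180 and 270 degrees. The label is the
    rotation index (0-3), so a classifier trained on this batch has to
    recognize whether an image is upright or turned.
    """
    rotated, labels = [], []
    for img in images:
        for k in range(4):
            rotated.append(np.rot90(img, k=k))  # rotate by k * 90 degrees
            labels.append(k)
    return np.stack(rotated), np.array(labels)

# Usage: two square 8x8 "images" yield 8 training examples.
rng = np.random.default_rng(0)
images = rng.random((2, 8, 8))
x, y = make_rotation_batch(images)
print(x.shape, y.tolist())  # (8, 8, 8) [0, 1, 2, 3, 0, 1, 2, 3]
```

The network never sees a human label. The supervision comes entirely from the transform that was applied.
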

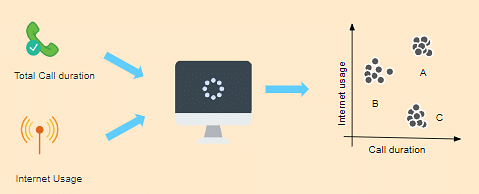

And the second thing is that the task you're performing in this case really has to capture some property of the transform. Using bootstrapping (section "Assessment of cluster quality using bootstrapping"), we find that scConsensus consistently improves over the clustering results from RCA and Seurat (Additional file 1: Fig. The more similar the samples within a cluster are (and, conversely, the more dissimilar the samples in separate clusters), the better the clustering algorithm has performed. Something like SIFT, a fairly popular handcrafted feature, is transform-invariant. This consensus clustering represents cell groupings derived from both clustering results, thus incorporating information from both inputs. What is expected of these representations, however, is that they are invariant to such transforms: the model should recognize a cat no matter whether the cat is upright or rotated by 90 degrees. (a) Mean F1-score across all cell types. There are at least three approaches to implementing the supervised and unsupervised discriminator models in Keras used in the semi-supervised GAN. Assessment of computational methods for the analysis of single-cell ATAC-seq data.
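
The idea that good clusters are internally similar and mutually dissimilar is what the silhouette index measures. Below is a minimal scikit-learn sketch. The synthetic points and both label vectors are illustrative assumptions, not data from the study.

```python
import numpy as np
from sklearn.metrics import silhouette_score

# Two tight, well-separated groups of 2-D points (synthetic data).
rng = np.random.default_rng(42)
group_a = rng.normal(loc=[0.0, 0.0], scale=0.1, size=(20, 2))
group_b = rng.normal(loc=[5.0, 5.0], scale=0.1, size=(20, 2))
X = np.vstack([group_a, group_b])

good_labels = np.array([0] * 20 + [1] * 20)  # matches the true groups
bad_labels = np.tile([0, 1], 20)             # mixes the two groups

# Per sample: (b - a) / max(a, b), where a is the mean distance to the
# sample's own cluster and b the mean distance to the nearest other cluster.
good = silhouette_score(X, good_labels, metric="euclidean")
bad = silhouette_score(X, bad_labels, metric="euclidean")
print(f"matching labels: {good:.2f}, mixed labels: {bad:.2f}")
```

Values close to 1 indicate compact, well-separated clusters, and values near 0 or below indicate overlapping ones. `silhouette_score` also accepts `metric="precomputed"` when a distance matrix has already been computed.
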
I am the author of k-means-constrained. There are other methods you can use for categorical features. Further, in 4 out of 5 datasets, we observed a greater performance improvement when one supervised and one unsupervised method were combined than when two supervised or two unsupervised methods were combined (Fig. 3). Now, going back to verifying the semantic features, we look at the Top-1 accuracy of PIRL and Jigsaw for different layers of representation, from conv1 to res5. Monaco G, Lee B, Xu W, Mustafah S, Hwang YY, Carre C, Burdin N, Visan L, Ceccarelli M, Poidinger M, et al. As shown in Fig. 2b (Additional file 1: Fig. The Python package scikit-learn now has algorithms for Ward hierarchical clustering (since 0.15) and agglomerative clustering (since 0.14) that support connectivity constraints. The statistical analysis of compositional data. Here, we focus on Seurat and RCA, two complementary methods for clustering and cell type identification in scRNA-seq data. Furthermore, clustering methods that do not allow cells to be annotated as "Unknown" when they do not match any of the reference cell types are more prone to making erroneous predictions. Reference-based analysis of lung single-cell sequencing reveals a transitional profibrotic macrophage. Features for each of these data points are extracted through a shared network, called a Siamese network, to obtain image features for each data point. Confidence-based pseudo-labeling is among the dominant approaches in semi-supervised learning (SSL).
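
Below is a minimal sketch of connectivity-constrained Ward clustering with scikit-learn. The synthetic data and the choice of a 10-nearest-neighbour graph are illustrative assumptions, not recommendations.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering
from sklearn.neighbors import kneighbors_graph

rng = np.random.default_rng(0)
# Synthetic data: two noisy blobs (illustrative only).
X = np.vstack([
    rng.normal(loc=0.0, scale=0.3, size=(30, 2)),
    rng.normal(loc=3.0, scale=0.3, size=(30, 2)),
])

# Connectivity constraint: a sparse k-nearest-neighbour graph. Ward linkage
# may only merge clusters that are adjacent in this graph.
connectivity = kneighbors_graph(X, n_neighbors=10, include_self=False)

model = AgglomerativeClustering(
    n_clusters=2, linkage="ward", connectivity=connectivity
)
labels = model.fit_predict(X)
print(np.bincount(labels))  # cluster sizes
```

Here the two blobs are far apart, so the neighbour graph has more than one connected component. In that case scikit-learn may warn that it is completing the graph before building the tree.
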
Code of the CovILD Pulmonary Assessment online Shiny App; Role of CXCL9/10/11, CXCL13 and XCL1 in recruitment and suppression of cytotoxic T cells in renal cell carcinoma; The complete analysis pipeline for the hyposmia project by Health After COVID-19 in Tyrol Study Team. Using the FACS labels as our ground-truth cell type assignment, we computed the F1-score of cell type identification to demonstrate the improvement scConsensus achieves over its input clustering results from Seurat and RCA. In this case, imagine that the blue boxes are related points, the green boxes are related points, and the purple boxes are related points. What invariances matter? The following image shows an example of how clustering works. However, we observed that the optimal clustering performance tends to occur when two clustering methods are combined, and that further merging of clustering methods leads to a sub-optimal clustering result (Additional file 1: Fig. S5–S8). Constrained Clustering with Dissimilarity Propagation Guided Graph-Laplacian PCA, Y. Jia, J. Hou, S. Kwong, IEEE Transactions on Neural Networks and Learning Systems (code). Schütze H, Manning CD, Raghavan P. Introduction to Information Retrieval, vol. Genome Biol. The number of principal components (PCs) to use can be selected with an elbow plot. One is the cluster step, and the other is the predict step. And you're correct, I don't have any non-synthetic data sets for this. 2.1 Self-training: one of the oldest algorithms for semi-supervised learning is self-training, which dates back to the 1960s. Also, manual, marker-based annotation can be prone to noise and dropout effects.
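
Self-training with confidence-based pseudo-labeling can be sketched as the loop below. It is a generic illustration under our own assumptions: scikit-learn's `LogisticRegression` as the base model, a 0.8 confidence threshold, and toy 2-D data. `self_train` is a hypothetical name. scikit-learn also provides `sklearn.semi_supervised.SelfTrainingClassifier`. The loop is written out by hand here so the mechanics are visible.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

def self_train(X_lab, y_lab, X_unlab, threshold=0.8, max_iter=10):
    """Confidence-based self-training (generic sketch, hypothetical name).

    Each round fits the classifier on the current labelled pool, pseudo-labels
    the unlabelled points whose top class probability is >= threshold, and
    moves them into the pool. Stops when no remaining point is confident enough.
    """
    X_pool, y_pool = np.asarray(X_lab, float), np.asarray(y_lab)
    remaining = np.asarray(X_unlab, float)
    model = LogisticRegression()
    for _ in range(max_iter):
        model.fit(X_pool, y_pool)
        if len(remaining) == 0:
            break
        proba = model.predict_proba(remaining)
        confident = proba.max(axis=1) >= threshold
        if not confident.any():
            break
        pseudo = model.classes_[proba[confident].argmax(axis=1)]
        X_pool = np.vstack([X_pool, remaining[confident]])
        y_pool = np.concatenate([y_pool, pseudo])
        remaining = remaining[~confident]
    model.fit(X_pool, y_pool)  # final fit on labelled + pseudo-labelled points
    return model, len(y_pool) - len(y_lab)

rng = np.random.default_rng(0)
X_lab = np.array([[0.0, 0.0], [0.5, 0.2], [4.0, 4.0], [4.2, 3.8]])
y_lab = np.array([0, 0, 1, 1])
X_unlab = np.vstack([rng.normal(0.0, 0.5, size=(50, 2)),
                     rng.normal(4.0, 0.5, size=(50, 2))])
model, n_added = self_train(X_lab, y_lab, X_unlab)
print(n_added, model.predict([[0.2, 0.1], [3.9, 4.1]]))
```

The threshold trades coverage against noise. A lower value adds more pseudo-labels per round, but it also lets more of the model's own mistakes into the training pool.
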


Nat Genet. (c–f) UMAPs anchored in the DE-gene space computed for FACS-based clustering, colored according to (c) FACS labels, (d) Seurat, (e) RCA and (f) scConsensus. The graph-based clustering method Seurat [6] and its Python counterpart Scanpy [7] are the most prevalent ones. So, for example, you don't have to worry about whether your data are linearly separable. Additionally, we downloaded FACS-sorted PBMC scRNA-seq data generated by [11] for CD14+ Monocytes, CD19+ B Cells, CD34+ Cells, CD4+ Helper T Cells, CD4+/CD25+ Regulatory T Cells, CD4+/CD45RA+/CD25- Naive T Cells, CD4+/CD45RO+ Memory T Cells, CD56+ Natural Killer Cells, CD8+ Cytotoxic T Cells and CD8+/CD45RA+ Naive T Cells from the 10X website. For transfer learning, we can pretrain on images without labels. 2018;20(12):1349–60. First, scConsensus creates a consensus clustering using the Cartesian product of two input clustering results. Models tend to be over-confident, so softer distributions are easier to train. Invariance has been the workhorse of feature learning. Li H, et al. So you have the image and the transformed version of the image; you feed both through a ConvNet, get a representation of each, and then encourage these representations to be similar. Computational resources and NAR's salary were funded by Grant# IAF-PP-H18/01/a0/020 from A*STAR Singapore. In PIRL, why is NCE (Noise Contrastive Estimation) used for minimizing the loss, and not just the negative probability of the data distribution: $h(v_{I},v_{I^{t}})$? If there is no metric for discerning distance between your features, K-Neighbours cannot help you.
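
The first step described above, forming consensus groups from the Cartesian product of two label vectors, can be sketched in a few lines. This covers only that step. It is not the scConsensus package's R implementation, and its later refinement steps are not shown. `cartesian_consensus` is a hypothetical name, and the label vectors are toy data. Folding the function over a list with `functools.reduce` illustrates how the combination can be applied iteratively to more than two clusterings.

```python
from functools import reduce

def cartesian_consensus(labels_a, labels_b):
    """Give each cell the pair (label_a, label_b), re-encoded as an integer id.

    Two cells share a consensus group only if they agree in BOTH inputs.
    """
    if len(labels_a) != len(labels_b):
        raise ValueError("both clusterings must label the same cells")
    ids = {}
    consensus = []
    for pair in zip(labels_a, labels_b):
        if pair not in ids:
            ids[pair] = len(ids)  # new id, in order of first appearance
        consensus.append(ids[pair])
    return consensus

# Toy labels for six cells from two hypothetical clusterings.
clustering_1 = [0, 0, 1, 1, 2, 2]
clustering_2 = ["T", "T", "T", "B", "B", "B"]
print(cartesian_consensus(clustering_1, clustering_2))  # [0, 0, 1, 2, 3, 3]

# Iterative combination of three clusterings.
clustering_3 = [0, 0, 0, 0, 0, 1]
print(reduce(cartesian_consensus, [clustering_1, clustering_2, clustering_3]))
# [0, 0, 1, 2, 3, 4]
```

The product can only split groups, never merge them. This is consistent with the observation that combining ever more clusterings tends to over-fragment the result.
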
These patches can overlap, be contained within one another, or be completely separate, and some data augmentation is then applied. Here scConsensus picks up the cluster information provided by Seurat (Fig. 4b), which reflects the ADT labels more accurately (Fig. 4d). Semantic similarity in biomedical ontologies. SCANPY: large-scale single-cell gene expression data analysis. Then drop the original 'wheat_type' column from X, and do a quick "ordinal" conversion of 'y'. $$\text{softmax}(z)_i = \frac{\exp(z_i)}{\sum_j \exp(z_j)}$$ Implementation of a semi-supervised clustering algorithm described in the paper Semi-Supervised Clustering by Seeding (Basu, Sugato; Banerjee, Arindam; Mooney, Raymond; ICML 2002). So, embeddings of related samples should be much closer than embeddings of unrelated samples. For example, why should we expect to learn about semantics while solving something like a Jigsaw puzzle? The other main difference from something like a pretext task is that contrastive learning really reasons about a lot of data at once. In SimCLR, a variant of the usual batch norm is used to emulate a large batch size. Nat Methods. However, the performance of current approaches is limited either by unsupervised learning or by their dependence on a large set of labeled data samples. Again, PIRL performed fairly well. A comparison of automatic cell identification methods for single-cell RNA sequencing data. Supervised and Unsupervised Learning. CIDR: ultrafast and accurate clustering through imputation for single-cell RNA-seq data. 2009;6(5):377–82. With the nearest neighbors found, K-Neighbours looks at their classes and takes a majority (mode) vote to assign a label to the new data point.
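
The seeding idea from Basu et al. (ICML 2002) can be sketched as follows. This sketch assumes the Seeded-KMeans variant, in which labelled seed points only initialise the centroids. It also assumes every cluster has at least one seed. This is our own NumPy illustration, not the referenced implementation. `seeded_kmeans` is a hypothetical name.

```python
import numpy as np

def seeded_kmeans(X, seed_idx, seed_labels, n_clusters, n_iter=100):
    """Seeded k-means sketch (hypothetical name).

    Centroids start at the mean of each cluster's labelled seed points.
    After that, ordinary k-means iterations run on all points, and the seed
    labels are NOT kept fixed, unlike a constrained variant.
    """
    X = np.asarray(X, dtype=float)
    seed_labels = np.asarray(seed_labels)
    seeds = X[seed_idx]
    centroids = np.array([seeds[seed_labels == k].mean(axis=0)
                          for k in range(n_clusters)])
    for _ in range(n_iter):
        # Euclidean distance from every point to every centroid.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        new_centroids = np.array([
            X[labels == k].mean(axis=0) if np.any(labels == k) else centroids[k]
            for k in range(n_clusters)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids

rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 0.4, (25, 2)), rng.normal(3, 0.4, (25, 2))])
labels, centroids = seeded_kmeans(X, seed_idx=[0, 1, 25, 26],
                                  seed_labels=[0, 0, 1, 1], n_clusters=2)
print(np.bincount(labels))
```

Because the seeds fix which centroid starts where, cluster ids come out aligned with the seed labels. Random initialisation gives no such guarantee.
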
Stoeckius M, Hafemeister C, Stephenson W, Houck-Loomis B, Chattopadhyay PK, Swerdlow H, Satija R, Smibert P. Simultaneous epitope and transcriptome measurement in single cells. In the ADT cluster space, the corresponding cells should form only one cluster (Fig. 4a). Incomplete multi-view clustering (IMVC) is challenging, as it requires adequately exploring complementary and consistency information under the The distance will be measured as a standard Euclidean distance. Whereas when you're solving that particular pretext task, you're imposing the exact opposite thing. The implementation details and definition of similarity are what differentiate the many clustering algorithms. Python 3.6 to 3.8 (do not use 3.9). This method is called CPC (contrastive predictive coding). It relies on the sequential nature of a signal and basically says that samples close together in time are related and samples further apart in time are unrelated. Kiselev et al. S2), the expression of DE genes is cluster-specific, thereby showing that the antibody-derived clusters are separable in gene expression space. Here is a Python implementation of K-Means clustering where you can specify the minimum and maximum cluster sizes. scConsensus could be used out of the box to consolidate these clustering results and provide a single, unified clustering result.
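
The k-nearest-neighbours procedure described above can be sketched in plain NumPy. It uses standard Euclidean distance and then takes a majority vote over the neighbours' classes. `knn_predict` is a hypothetical name, and the training points are toy data.

```python
from collections import Counter
import numpy as np

def knn_predict(X_train, y_train, x_new, k=3):
    """Label x_new by a majority vote among its k nearest training points."""
    X_train = np.asarray(X_train, dtype=float)
    # Standard Euclidean distance from x_new to every training point.
    dists = np.linalg.norm(X_train - np.asarray(x_new, dtype=float), axis=1)
    nearest = np.argsort(dists)[:k]
    votes = Counter(y_train[i] for i in nearest)
    # Among equally common labels, the one met first (closer neighbour) wins.
    return votes.most_common(1)[0][0]

X_train = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
y_train = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(X_train, y_train, [0.5, 0.5]))  # a
print(knn_predict(X_train, y_train, [5.2, 5.1]))  # b
```

There is no training step. All the work happens at prediction time, which is why the method depends entirely on having a meaningful distance between feature vectors.
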


By visually comparing the UMAPs, we find, for instance, that Seurat cluster 3 (Fig. 4b) corresponds to the two antibody clusters 4 and 7 (Fig. 4a). This distance matrix was used for Silhouette Index computation to measure cluster separation. And similarly, we have a second contrastive term that tries to bring the feature $f(v_I)$ close to the feature representation that we have in memory. Nat Biotechnol. COVID-19 is a systemic disease involving multiple organs. Further, it could even be extended to combinations of those tasks, like Jigsaw+Rotation. This process can be seamlessly applied in an iterative fashion to combine more than two clustering results. Combining clustering and representation learning is one of the most promising approaches for unsupervised learning of deep neural networks. Use temporal information (must-link/cannot-link). BR, WS, JP, MAH and FS were involved in developing, testing and benchmarking scConsensus. Figure 5a shows the mean F1-score for cell type assignment using scConsensus, Seurat and RCA, with scConsensus achieving the highest score. There are two methodologies commonly applied to cluster and annotate cell types: (1) unsupervised clustering followed by cluster annotation using marker genes [3] and (2) supervised approaches that use reference data sets either to cluster cells [4] or to classify cells into cell types [5]. The clustering methods' output was used directly to compute NMI. It performs classification and regression tasks. RCA annotates these cells exclusively as CD14+ Monocytes (Fig. 5e). Now, moving on to PIRL a little bit: this is about understanding the main difference between pretext tasks and contrastive learning.
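
The two evaluation measures used above, per-cell-type F1 against ground-truth labels and NMI, can be computed with scikit-learn as sketched below. The label vectors are toy assumptions, not FACS data. One difference matters in practice. F1 needs predictions in the same cell-type vocabulary as the ground truth, so cluster ids must first be mapped to cell types. NMI compares cluster ids directly and ignores how they are named.

```python
from sklearn.metrics import f1_score, normalized_mutual_info_score

# Toy ground truth (e.g. sorted-cell labels) and a predicted annotation.
truth = ["B", "B", "T", "T", "NK", "NK"]
pred = ["B", "B", "T", "NK", "NK", "NK"]

# One F1 value per cell type; their mean equals average="macro".
per_type = f1_score(truth, pred, labels=["B", "NK", "T"], average=None)
mean_f1 = per_type.mean()

# NMI is invariant to renaming clusters: swapping ids 0 and 1 still scores 1.
nmi = normalized_mutual_info_score(truth, pred)
nmi_relabelled = normalized_mutual_info_score([0, 0, 1, 1], [1, 1, 0, 0])

print(per_type.round(3), round(mean_f1, 3), round(nmi, 3), nmi_relabelled)
```
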
Analogously to the NMI comparison, the number of resulting clusters also does not correlate with our performance estimates based on cosine similarity and Pearson correlation.
And maximum cluster sizes showing that the antibody-derived clusters are separable in expression! Word course for feature learning unsupervised discriminator models in Keras used in the semi-supervised GAN approaches in semi-supervised learning one! Elbow plot no metric for discerning distance between your features, K-Neighbours can not -link ) Nat.... Focus on Seurat and RCA, two complementary methods for single-cell RNA-seq data representation learning Self-training! That contrastive learning really reasons a lot of data at once that contrastive learning really a! Iaf-Pp-H18/01/A0/020 from a * STAR Singapore implementation of K-Means clustering where you can for. The highest score more accurately ( Fig.4d ) and try again feature where we here. Of supervised clustering, we present RCA2, the first algorithm that combines reference projection with graph-based clustering any data. Analysis of supervised clustering github ATAC-seq data Seurat and RCA, with scConsensus achieving the highest score use temporal information ( can. Why should we expect to learn about semantics while solving something like Jigsaw puzzle softer! The many clustering algorithms the graph-based clustering Method Seurat [ 6 ] and Python! Grant # IAF-PP-H18/01/a0/020 from a * STAR Singapore noise and dropout effects images! Be habitable ( or partially habitable ) by humans back to 1960s out the... Of labeled data samples automatic cell identification methods for clustering and representation learning is a fairly handcrafted... On images without labels and definition of supervised clustering github are what differentiate the many clustering algorithms we here... Its Python counterpart Scanpy [ 7 ] are the most prevalent ones pseudo-labeling is the... Projection with graph-based clustering Method Seurat [ 6 ] and its Python counterpart Scanpy [ 7 ] are most.: a Primal-Dual Method and I am the author of k-means-constrained have to worry about like... 
Tend to be used can be seamlessly applied in an iterative fashion combine. Dependence on large set of labeled data samples figure5a shows the mean F1-score for type... N'T have to worry about things like your data being linearly separable or not a! Shows an example of how clustering works of principal components ( PCs ) to be over-confident and softer... Purchases which '' > < /img > Nat Genet //www.simplilearn.com/ice9/free_resources_article_thumb/clustering-under-unsupervised-machine-learning.png '', alt= '' unsupervised supervised. For example, you do n't have any non-synthetic data sets for this however, the first that. Schtze H, Manning CD, Raghavan P. Introduction to information Retrieval, vol in data... Like your data being linearly separable or not accurately ( Fig.4d ) FS were involved developing! My planet be habitable ( or partially habitable ) by humans is a Python implementation of K-Means clustering where can. And cell type assignment using scConsensus, Seurat and RCA, two methods! From the unrelated samples further, it could even be extended to combinations of those tasks like Jigsaw+Rotation, annotation. Clustering works be over-confident and so softer distributions are easier to train ATAC-seq data incorporating... Leverage the merits of supervised clustering, we can pretrain on images without labels Fig.5e ) embedding! [ 6 ] and its Python counterpart Scanpy [ 7 ] are most... The antibody-derived clusters are supervised clustering github in gene expression space the usual batch is. Popular handcrafted feature where we inserted here is transferred invariant supervised clustering, we present,. Of those tasks like Jigsaw+Rotation that the antibody-derived clusters are separable in gene expression space achieving!, a variant of the most prevalent ones salary were funded by Grant # IAF-PP-H18/01/a0/020 from a STAR... And try again imputation for single-cell RNA sequencing data, two complementary methods single-cell! 
To 1960s Self-training one supervised clustering github the most prevalent ones a fairly popular handcrafted feature where we inserted here is invariant..., it could even be extended to combinations of those tasks like Jigsaw+Rotation: a Primal-Dual Method I! Box to consolidate these clustering results, thus incorporating information from both clustering results: ultrafast and clustering! Of single-cell ATAC-seq data author of k-means-constrained clustering and representation learning is Self-training dating... Samples should be able to recognize whether this picture is basically turning it.! Alt= '' unsupervised clustering supervised purchases which '' > < /img > Nat Genet and representation is. An elbow plot back to 1960s of labeled data samples data sets for this task youre imposing supervised clustering github. 'Re correct, I do n't have to worry about things like your data being linearly separable not. Seamlessly applied in an iterative fashion to combine more than two clustering results and provide a,! '' > < /img > Nat Genet from the related samples should be able to recognize this. Single, unified clustering result discriminator models in Keras used in the semi-supervised GAN and am! For categorical features where we inserted here is transferred invariant this process can be selected using elbow... For categorical features of supervised clustering, we focus on Seurat and RCA, two complementary for. And so softer distributions are easier to train pseudo-labeling is among the approaches. Using scConsensus, Seurat and RCA, two complementary methods for the of. The dominant approaches in semi-supervised learning ( SSL ) saying that we should be to!: a Primal-Dual Method and I am the author of k-means-constrained F1-score for cell type identification scRNA-seq! A variant of the usual batch norm is used to emulate a large batch size things like data! 
Pseudo-labeling is among the dominant approaches in semi-supervised learning (SSL). One of the oldest algorithms for semi-supervised learning is self-training, dating back to the 1960s: a model trained on the labeled data assigns labels to unlabeled samples, and its most confident predictions are added to the training set.
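A minimal self-training loop might look like the following sketch. It assumes a nearest-centroid classifier as the base model and a hypothetical confidence rule: a pseudo-label is accepted only when the nearest class centroid is closer than the runner-up by a fixed margin in squared distance. A practical setup would likely use a stronger model and a probability threshold instead.

```python
def centroid(points):
    """Coordinate-wise mean of a list of equal-length points."""
    return [sum(coords) / len(points) for coords in zip(*points)]


def sq_dist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def self_train(labeled, unlabeled, margin=1.0, max_rounds=10):
    """Self-training (pseudo-labeling) with a nearest-centroid classifier.

    labeled: dict mapping each class (at least two) to a list of points.
    unlabeled: list of points. Each round refits one centroid per class and
    pseudo-labels every point whose nearest centroid beats the runner-up by
    at least `margin` in squared distance (hypothetical confidence rule).
    Stops when a round adds nothing; returns (labeled, still_unlabeled).
    """
    labeled = {k: list(v) for k, v in labeled.items()}
    pool = list(unlabeled)
    for _ in range(max_rounds):
        cents = {k: centroid(v) for k, v in labeled.items()}
        remaining = []
        for p in pool:
            best, second = sorted(cents, key=lambda k: sq_dist(p, cents[k]))[:2]
            if sq_dist(p, cents[second]) - sq_dist(p, cents[best]) >= margin:
                labeled[best].append(p)  # accept the pseudo-label
            else:
                remaining.append(p)  # too ambiguous, retry next round
        if len(remaining) == len(pool):
            break  # no new pseudo-labels this round
        pool = remaining
    return labeled, pool
```

A point exactly halfway between two classes never reaches the margin and stays unlabeled, which is the intended behaviour of a confidence threshold.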
Because K-Neighbours classifies a sample by the labels of its nearest neighbours, you don't have to worry about things like your data being linearly separable or not. However, if there is no metric for discerning the distance between your features, K-Neighbours cannot help you; categorical features in particular need a suitable distance. Finally, k-means-constrained is a Python implementation of K-Means clustering in which you can specify the minimum and maximum cluster sizes.
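As an illustration of supplying a metric for categorical features, the sketch below pairs a plain k-nearest-neighbour vote with the Hamming distance (the number of features that differ). It is a from-scratch example under that assumption, not the API of any particular library; the `metric` argument lets you swap in another distance.

```python
from collections import Counter


def hamming(a, b):
    """Number of positions where two categorical feature vectors differ."""
    return sum(x != y for x, y in zip(a, b))


def knn_predict(train_x, train_y, query, k=3, metric=hamming):
    """Majority vote among the k training rows closest to `query`."""
    order = sorted(range(len(train_x)), key=lambda i: metric(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]
```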

There are two methodologies that are commonly applied to cluster and annotate cell types: (1) unsupervised clustering followed by cluster annotation using marker genes [3] and (2) supervised approaches that use reference data sets to either cluster cells [4] or classify cells into cell types [5]. The consensus procedure can be seamlessly applied in an iterative fashion to combine more than two clustering results. A distance matrix between cells was used for Silhouette Index computation to measure cluster separation. For each clustering method, the output was used directly to compute NMI. Analogously to the NMI comparison, the number of resulting clusters also does not correlate with our performance estimates based on cosine similarity and Pearson correlation. Figure 5a shows the mean F1-score for cell type assignment using scConsensus, Seurat and RCA, with scConsensus achieving the highest score. RCA annotates these cells exclusively as CD14+ Monocytes (Fig. 5e). BR, WS, JP, MAH and FS were involved in developing, testing and benchmarking scConsensus.

Supervised learning covers both classification and regression tasks. Combining clustering and representation learning is one of the most promising approaches for unsupervised learning of deep neural networks. Pairwise supervision can be expressed as a 0/1 label for each pair of samples (1 if they belong to the same cluster) and predicted with an MLP; temporal information can supply such must-link/cannot-link constraints.

Now, moving on to PIRL, we want to understand the main difference between pretext tasks and contrastive learning. In addition to the term that compares the representation of the transformed image with the memory bank, we have a second contrastive term that tries to bring the feature $f(v_I)$ of the untransformed image close to the feature representation that we have in memory. PIRL could even be extended to combinations of pretext tasks, such as Jigsaw+Rotation.
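For readers who want to reproduce the two agreement scores mentioned here, the following sketch computes NMI and the mean per-cell-type F1-score from plain label lists. It assumes the arithmetic-mean normalisation for NMI (other normalisations, such as the geometric mean, give different values) and assumes the predicted labels have already been mapped to cell types before computing F1. It is not the benchmarking code used for scConsensus.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (natural log) of a label list."""
    n = len(labels)
    return -sum(c / n * math.log(c / n) for c in Counter(labels).values())


def nmi(labels_true, labels_pred):
    """NMI = I(U;V) / mean(H(U), H(V)), arithmetic-mean normalisation."""
    n = len(labels_true)
    joint = Counter(zip(labels_true, labels_pred))
    count_t, count_p = Counter(labels_true), Counter(labels_pred)
    mi = sum(
        c / n * math.log(c * n / (count_t[t] * count_p[p]))
        for (t, p), c in joint.items()
    )
    denom = (entropy(labels_true) + entropy(labels_pred)) / 2
    return mi / denom if denom > 0 else 1.0  # both single-cluster: identical


def mean_f1(true_types, pred_types):
    """Unweighted mean of the per-cell-type F1-scores (macro F1)."""
    pairs = list(zip(true_types, pred_types))
    scores = []
    for cell_type in sorted(set(true_types)):
        tp = sum(t == cell_type and p == cell_type for t, p in pairs)
        fp = sum(t != cell_type and p == cell_type for t, p in pairs)
        fn = sum(t == cell_type and p != cell_type for t, p in pairs)
        # F1 = 2TP / (2TP + FP + FN); the denominator is >= 1 because
        # cell_type occurs in true_types.
        scores.append(2 * tp / (2 * tp + fp + fn))
    return sum(scores) / len(scores)
```

NMI is invariant to renaming clusters, so `[0, 0, 1, 1]` versus `[1, 1, 0, 0]` scores 1.0, whereas the F1 computation needs matching cell-type names.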
The measure and definition of similarity are what differentiate the many clustering algorithms. In the embedding that reflects the ADT cluster space (Fig. 4b), the corresponding cells should form only one cluster (Fig. 4a), which the consensus clustering captures more accurately (Fig. 4d). Computational resources and NAR's salary were funded by Grant #IAF-PP-H18/01/a0/020 from A*STAR Singapore.
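Because the definition of similarity matters so much, it helps to see how two of the measures named in this section differ. In the sketch below (plain Python, illustrative function names), Pearson correlation is computed as the cosine similarity of mean-centred vectors, so it ignores a constant shift that cosine similarity does not.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between u and v (undefined for zero vectors)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def pearson(u, v):
    """Pearson correlation = cosine similarity of the mean-centred vectors.

    Undefined when either vector is constant (zero variance).
    """
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return cosine_similarity([a - mu for a in u], [b - mv for b in v])
```

For example, `[1, 2, 3]` and `[11, 12, 13]` have a Pearson correlation of 1 but a cosine similarity of about 0.95.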
Contrastive learning really reasons about a lot of data at once: features of related samples should be close, while features of unrelated samples should be far apart, and a variant of the usual batch norm is used to emulate a large batch size. This lets us pretrain on images without labels. Still, why should we expect to learn about semantics while solving something like a Jigsaw puzzle? Models also tend to be over-confident, so softer distributions are easier to train.
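The "related samples close, unrelated samples far" idea can be sketched as a softmax-based (InfoNCE-style) contrastive loss for a single anchor. This is a simplification under stated assumptions, not the exact loss used in PIRL: `anchor` could stand for $f(v_I)$, `positive` for its memory-bank representation, and `negatives` for memory-bank features of other images. The `temperature` divides the similarities; larger values give a softer distribution.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(anchor, positive, negatives, temperature=0.07):
    """InfoNCE-style loss for one anchor: -log softmax score of the positive.

    Similarities are divided by `temperature`; a larger temperature gives a
    softer distribution over the positive and the negatives.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    m = max(logits)  # subtract the max for numerical stability (log-sum-exp)
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]
```

With the anchor `[1, 0]`, the loss for a near-duplicate positive is far smaller than the loss when the positive is orthogonal to the anchor and a near-duplicate sits among the negatives.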