Error: API requests are being delayed for this account. New posts will not be retrieved.

Log in as an administrator and view the Instagram Feed settings page for more details.

Error: API requests are being delayed for this account. New posts will not be retrieved.

Log in as an administrator and view the Instagram Feed settings page for more details.

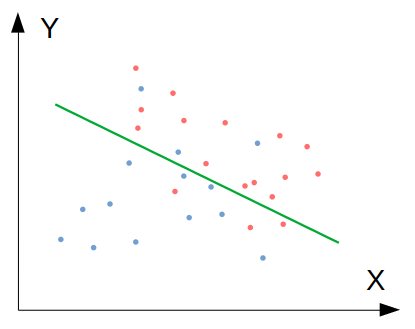

Machine learning models cannot be a black box. This happens when the Variance is high, our model will capture all the features of the data given to it, including the noise, will tune itself to the data, and predict it very well but when given new data, it cannot predict on it as it is too specific to training data., Hence, our model will perform really well on testing data and get high accuracy but will fail to perform on new, unseen data. We consider these variables being drawn IID from a distribution (X, Z, H, S, R) . Intervening on the underlying dynamic variables changes the distribution accordingly. Estimators, Bias and Variance 5. Therefore, unlike the structure in the underlying dynamics, H may not fully separate X from Rwe must allow for the possibility of a direct connection from X to R. (C) and (D) are simple examples illustrating the difference between observed dependence and causal effect. sin This allows for an exploration SDEs performance in a range of network states. The choice of functionals is required to be such that, if there is a dependence between two underlying dynamical variables (e.g. Bias is the difference between our actual and predicted values. Data Availability: All python code used to run simulations and generate figures is available at https://github.com/benlansdell/rdd. However, despite important special cases [17, 19, 23], in general it is not clear how a neuron may know its own noise level. In contrast, using the observed-dependence estimator on the confounded inputs significantly deviates from the true causal effect. ( noise magnitude and correlation): (X, Z, H, S, R) (; ). For instance, a model that does not match a data set with a high bias will create an inflexible model with a low variance that results in a suboptimal machine learning model. (A) Simulated spike trains are used to generate Si|Hi = 0 and Si|Hi = 1. A lot of recurrent neural networks, when applied to spiking neural networks, have to deal with propagating gradients through the discontinuous spiking function [5, 4448]. {\displaystyle {\hat {f}}(x)} When R is a deterministic, differentiable function of S and s 0 this recovers the reward gradient and we recover gradient descent-based learning. As outlined in the introduction, the idea is that inputs that place a neuron close to its spiking threshold can be used in an unbiased causal effect estimator. ) It will capture most patterns in the data, but it will also learn from the unnecessary data present, or from the noise. The main difference between the two types is that At the end of a trial period T, the neural output determines a reward signal R. Most aspects of causal inference can be investigated in a simple, few-variable model such as this [32], thus demonstrating that a neuron can estimate a causal effect in this simple case is an important first step to understanding how it can do so in a larger network. HTML5 video. ) WebI am watching DeepMind's video lecture series on reinforcement learning, and when I was watching the video of model-free RL, the instructor said the Monte Carlo methods have If considered as a gradient then any angle well below ninety represents a descent direction in the reward landscape, and thus shifting parameters in this direction will lead to improvements. This just ensures that we capture the essential patterns in our model while ignoring the noise present it in. 1 Thus we see that learning rules that aim at maximizing some reward either implicitly or explicitly involve a neuron estimating its causal effect on that reward signal. Reward-modulated STDP (R-STDP) can be shown to In these simulations updates to are made when the neuron is close to threshold, while updates to wi are made for all time periods of length T. Learning exhibits trajectories that initially meander while the estimate of settles down (Fig 4C). Software, Bias and variance are just descriptions for the two ways that a model can give subpar results. Answer: The bias-variance tradeoff refers to the tradeoff between the complexity of a model and its ability to f 2 Importantly, this finite-difference approximation is exactly what our estimator gets at. Comparing the average reward when the neuron spikes versus does not spike gives a confounded estimate of the neurons effect. (B) The linear model is unbiased over larger window sizes and more highly correlated activity (high c). Thus if the noise a neuron uses for learning is correlated with other neurons then it can not know which neurons changes in output is responsible for changes in reward. This reflects the fact that a zero-bias approach has poor generalisability to new situations, and also unreasonably presumes precise knowledge of the true state of the world. The simulations for Figs 3 and 4 are about standard supervised learning and there an instantaneous reward is given by . (3) It is important to note that other neural learning rules also perform causal inference. Both such models are explored in the simulations below. The model's simplifying assumptions simplify the target function, making it easier to estimate. With our history of innovation, industry-leading automation, operations, and service management solutions, combined with unmatched flexibility, we help organizations free up time and space to become an Autonomous Digital Enterprise that conquers the opportunities ahead. We have omitted the dependence on X for simplicity. In this way the spiking discontinuity may allow neurons to estimate their causal effect. Thus it is an approach that can be used in more neural circuits than just those with special circuitry for independent noise perturbations. (7) ( Web14.1 Unsupervised Learning; 14.2 K-Means Clustering; 14.3 K-Means Algorithm; 14.4 K-Means Example; 14.5 Hierarchical Clustering; 15 Dimension Reduction. (8) and thus show that: Finally, MSE loss function (or negative log-likelihood) is obtained by taking the expectation value over {\displaystyle D=\{(x_{1},y_{1})\dots ,(x_{n},y_{n})\}} ( Writing review & editing, Affiliations Thus the spiking discontinuity learning rule can be placed in the context of other neural learning mechanisms. Call this naive estimator the observed dependence. PCP in AI and Machine Learning In Partnership with Purdue University Explore Course 6. ^ where and , i = wi is the input noise standard deviation [21]. No, Is the Subject Area "Neural networks" applicable to this article? Writing original draft, Irreducible errors are errors which will always be present in a machine learning model, because of unknown variables, and whose values cannot be reduced. Mention them in this article's comments section, and we'll have our experts answer them for you at the earliest! [15][16] Alternatively, if the classification problem can be phrased as probabilistic classification, then the expected squared error of the predicted probabilities with respect to the true probabilities can be decomposed as before. x b We are still seeking to understand how biological neural networks effectively solve this problem. Do you have any doubts or questions for us? n BMC works with 86% of the Forbes Global 50 and customers and partners around the world to create their future. These ideas have extensively been used to model learning in brains [1622]. This disparity between biological neurons that spike and artificial neurons that are continuous raises the question, what are the computational benefits of spiking? and Conditions. In the following example, we will have a look at three different linear regression modelsleast-squares, ridge, and lassousing sklearn library. In this, both the bias and variance should be low so as to prevent overfitting and underfitting. Each of the above functions will run 1,000 rounds (num_rounds=1000) before calculating the average bias and variance values. Department of Bioengineering, University of Pennsylvania, Philadelphia, Pennsylvania, United States of America, Then the comparison in reward between time periods when a neuron almost reaches its firing threshold to moments when it just reaches its threshold allows for an unbiased estimate of its own causal effect (Fig 2D and 2E). Copy this link and share it with your friends, Copy this link and share it with your WebDifferent Combinations of Bias-Variance. ; (B) To formulate the supervised learning problem, these variables are aggregated in time to produce summary variables of the state of the network during the simulated window. A Computer Science portal for geeks. (2) {\displaystyle {\hat {f}}(x;D)} The biasvariance dilemma or biasvariance problem is the conflict in trying to simultaneously minimize these two sources of error that prevent supervised learning algorithms from generalizing beyond their training set:[1][2]. The functionals are required to only depend on one underlying dynamical variable. "Quantifying causality for neuroscience" 1R01EB028162-01 https://braininitiative.nih.gov/funded-awards/quantifying-causality-neuroscience The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. Noisy input i is comprised of a common DC current, x, and noise term, (t), plus an individual noise term, i(t): The results in this model exhibit the same behavior as that observed in previous sectionsfor sufficiently highly correlated activity, performance is better for a narrow spiking discontinuity parameter p (cf. Formal analysis, In contrast, the spiking discontinuity error is more or less constant as a function of correlation coefficient, except for the most extreme case of c = 0.99. , With confounding learning based on observed dependence converges slowly or not at all, whereas spike discontinuity learning succeeds. draws from some distribution, which depends on the networks weights and other parameters, (e.g. Bias and variance are two key components that you must consider when developing any good, accurate machine learning model. Decreasing the value of will solve the Underfitting (High Bias) problem. The asymptotic bias is directly related to the learning algorithm (independently of the quantity of data) while the overfitting term comes from the fact that the amount of data is limited. Learning in birdsong is a particularly well developed example of this form of learning [17]. 15.1 Curse of as follows:[6]:34[7]:223. The higher the algorithm complexity, the lesser variance. In statistics and machine learning, the biasvariance tradeoff is the property of a model that the variance of the parameter estimated across samples can be reduced by increasing the bias in the estimated parameters. Today, computer-based simulations are widely used in a range of industries and fields for various purposes. More specifically: Free, https://www.learnvern.com/unsupervised-machine-learning. In addition, one has to be careful how to define complexity: In particular, the number of parameters used to describe the model is a poor measure of complexity. High Bias, High Variance: On average, models are wrong and inconsistent. associated with each point y Once a neuron can estimate its causal effect, it can use this knowledge to calculate gradients and adjust its synaptic strengths. two key components that you must consider when developing any good, accurate machine learning model. We will be using the Iris data dataset included in mlxtend as the base data set and carry out the bias_variance_decomp using two algorithms: Decision Tree and Bagging. Low Bias models: k-Nearest It turns out that the our accuracy on the training data is an upper bound on the accuracy we can expect to achieve on the testing data. The relationship between bias and variance is inverse. Enroll in Simplilearn's AIML Course and get certified today. This work suggests that understanding learning as a causal inference problem can provide insight into the role of noise correlations in learning.

Simulating this simple two-neuron network shows how a neuron can estimate its causal effect using the SDE (Fig 3A and 3B). That is, let Zi be the maximum integrated neural drive to the neuron over the trial period. , Models with a high bias and a low variance are consistent but wrong on average. The correlation in input noise induces a correlation in the output spike trains of the two neurons [31], thereby introducing confounding. Conceptualization, This results in small bias. (C) Over a range of values (0.01 < T < 0.1, 0.01 < s < 0.1) the derived estimate of s (Eq (13)) is compared to simulated s. Let's take a similar example is before, but this time we do not tell the machine whether it's a spoon or a knife. Learning by operant conditioning relies on learning a causal relationship (compared to classical conditioning, which only relies on learning a correlation) [27, 3537]. {\displaystyle x} Yet, backpropagation is significantly more efficient than perturbation-based methodsit is the only known algorithm able to solve large-scale problems at a human-level [42]. However, complexity will make the model "move" more to capture the data points, and hence its variance will be larger. What is bias-variance tradeoff and how do you balance it? f Moreover, it describes how well the model matches the training data set: Characteristics of a high bias model include: Variance refers to the changes in the model when using different portions of the training data set. This class of learning methods has been extensively explored [1622]. WebWe are doing our best to resolve all the issues as quickly as possible. For more information about PLOS Subject Areas, click Bias: how closely does your model t the observed data? This shows that over a range of network sizes and confounding levels, a spiking discontinuity estimator is robust to confounding. x The SDE estimates [28]: To address this question, we ran extra simulations in which the window size is asymmetric. A neuron can perform stochastic gradient descent on this minimization problem, giving the learning rule: WebIn machine learning, models can suffer from two types of errors: bias and variance. Consider the ordering of the variables that matches the feedforward structure of the underlying dynamic feedforward network (Fig 1A). Builder, Certificate x WebThis results in small bias. There are four possible combinations of bias and variances, which are represented by the below diagram: Low-Bias, Low-Variance: The Hyperparameters and Validation Sets 4. https://doi.org/10.1371/journal.pcbi.1011005.g001. What is the difference between supervised and unsupervised learning? The three terms represent: Since all three terms are non-negative, the irreducible error forms a lower bound on the expected error on unseen samples. We use a single hidden layer of N neurons each receives inputs . the App, Become Well cover use cases in more detail a bit later. (6) However any discontinuity in reward at the neurons spiking threshold can only be attributed to that neuron. These differences are called errors. y MSE When estimating the simpler, piece-wise constant model for either side of the threshold, the learning rule simplifies to: e1011005. To create an accurate model, a data scientist must strike a balance between bias and variance, ensuring that the model's overall error is kept to a minimum. f Bias: how closely does your model t the observed data? This linear dependence on the maximal voltage of the neuron may be approximated by plasticity that depends on calcium concentration; such implementation issues are considered in the discussion. When making Roles With larger data sets, various implementations, algorithms, and learning requirements, it has become even more complex to create and evaluate ML models since all those factors directly impact the overall accuracy and learning outcome of the model. , where the noise, Answer: Supervised learning involves training a model on labeled data, where the desired output is known, in order to make predictions on new data. Many of these methods use something like the REINFORCE algorithm [39], a policy gradient method in which locally added noise is correlated with reward and this correlation is used to update weights. The graph is directed, acyclic and fully-connected. 1 Variance specifies the amount of variation that the estimate of the target function will change if different training data was used. A sample raster of 20 neurons is shown here. Is the Subject Area "Neurons" applicable to this article? LIF neurons have refractory period of 1,3 or 5 ms. Error is comparable for different refractory periods. All these contribute to the flexibility of the model. We thus use a standard model for the dynamics of all the neurons. Splitting the dataset into training and testing data and fitting our model to it. locally, when Zi is within a small window p of threshold. (9) {\displaystyle (y-{\hat {f}}(x;D))^{2}} and Neuromodulated-STDP is well studied in models [51, 59, 60]. About the clustering and association unsupervised learning problems. In machine learning, our goal is to find the sweet spot between bias and variance a balanced model thats neither too simple nor too complex. Generally, your goal is to keep bias as low as possible while introducing acceptable levels of variances. Having validated spiking discontinuity-based causal inference in a small network, we investigate how well we can estimate causal effects in wider and deeper networks. WebBias and variance for regression For regression, we can easily decompose the error of the learned model into two parts: bias (error 1) and variance (error 2) Bias: the class [11] argue that the biasvariance dilemma implies that abilities such as generic object recognition cannot be learned from scratch, but require a certain degree of "hard wiring" that is later tuned by experience. , On the other hand, variance creates variance errors that lead to incorrect predictions seeing trends or data points that do not exist. Second, assuming such a CBN, we relate the causal effect of a neuron on a reward function to a finite difference approximation of the gradient of reward with respect to neural activity. We just showed that the spiking discontinuity allows neurons to estimate their causal effect. Increasing the training data set can also help to balance this trade-off, to some extent. This is called Overfitting., Figure 5: Over-fitted model where we see model performance on, a) training data b) new data, For any model, we have to find the perfect balance between Bias and Variance. Accuracy is a description of bias and can intuitively be improved by selecting from only local information. ( The causal effect in the correlated inputs case is indeed close to this unbiased value. ^ The network is presented with this input stimulus for a fixed period of T seconds. However, if being adaptable, a complex model ^f f ^ tends to vary a lot from sample to sample, which means high variance. (D,E) Convergence of observed dependence (D) and spiking discontinuity (E) learning rule to confounded network (c = 0.5). Further fleshing out an explicit theory that relates neural network activity to a formal causal model is an important future direction. This book is for managers, programmers, directors and anyone else who wants to learn machine learning. Computationally, despite a lot of recent progress [15], it remains challenging to create spiking neural networks that perform comparably to continuous artificial networks. Because neurons are correlated, a given neuron spiking is associated with a different network state than that neuron not-spiking. Thus, as discussed, such algorithms require biophysical mechanisms to distinguish independent perturbative noise from correlated input signals in presynaptic activity, and in general it is unclear how a neuron can do this. This works because the discontinuity in the neurons response induces a detectable difference in outcome for only a negligible difference between sampled populations (sub- and super-threshold periods). Explicitly recognizing this can lead to new methods and understanding. x

Simulating this simple two-neuron network shows how a neuron can estimate its causal effect using the SDE (Fig 3A and 3B). That is, let Zi be the maximum integrated neural drive to the neuron over the trial period. , Models with a high bias and a low variance are consistent but wrong on average. The correlation in input noise induces a correlation in the output spike trains of the two neurons [31], thereby introducing confounding. Conceptualization, This results in small bias. (C) Over a range of values (0.01 < T < 0.1, 0.01 < s < 0.1) the derived estimate of s (Eq (13)) is compared to simulated s. Let's take a similar example is before, but this time we do not tell the machine whether it's a spoon or a knife. Learning by operant conditioning relies on learning a causal relationship (compared to classical conditioning, which only relies on learning a correlation) [27, 3537]. {\displaystyle x} Yet, backpropagation is significantly more efficient than perturbation-based methodsit is the only known algorithm able to solve large-scale problems at a human-level [42]. However, complexity will make the model "move" more to capture the data points, and hence its variance will be larger. What is bias-variance tradeoff and how do you balance it? f Moreover, it describes how well the model matches the training data set: Characteristics of a high bias model include: Variance refers to the changes in the model when using different portions of the training data set. This class of learning methods has been extensively explored [1622]. WebWe are doing our best to resolve all the issues as quickly as possible. For more information about PLOS Subject Areas, click Bias: how closely does your model t the observed data? This shows that over a range of network sizes and confounding levels, a spiking discontinuity estimator is robust to confounding. x The SDE estimates [28]: To address this question, we ran extra simulations in which the window size is asymmetric. A neuron can perform stochastic gradient descent on this minimization problem, giving the learning rule: WebIn machine learning, models can suffer from two types of errors: bias and variance. Consider the ordering of the variables that matches the feedforward structure of the underlying dynamic feedforward network (Fig 1A). Builder, Certificate x WebThis results in small bias. There are four possible combinations of bias and variances, which are represented by the below diagram: Low-Bias, Low-Variance: The Hyperparameters and Validation Sets 4. https://doi.org/10.1371/journal.pcbi.1011005.g001. What is the difference between supervised and unsupervised learning? The three terms represent: Since all three terms are non-negative, the irreducible error forms a lower bound on the expected error on unseen samples. We use a single hidden layer of N neurons each receives inputs . the App, Become Well cover use cases in more detail a bit later. (6) However any discontinuity in reward at the neurons spiking threshold can only be attributed to that neuron. These differences are called errors. y MSE When estimating the simpler, piece-wise constant model for either side of the threshold, the learning rule simplifies to: e1011005. To create an accurate model, a data scientist must strike a balance between bias and variance, ensuring that the model's overall error is kept to a minimum. f Bias: how closely does your model t the observed data? This linear dependence on the maximal voltage of the neuron may be approximated by plasticity that depends on calcium concentration; such implementation issues are considered in the discussion. When making Roles With larger data sets, various implementations, algorithms, and learning requirements, it has become even more complex to create and evaluate ML models since all those factors directly impact the overall accuracy and learning outcome of the model. , where the noise, Answer: Supervised learning involves training a model on labeled data, where the desired output is known, in order to make predictions on new data. Many of these methods use something like the REINFORCE algorithm [39], a policy gradient method in which locally added noise is correlated with reward and this correlation is used to update weights. The graph is directed, acyclic and fully-connected. 1 Variance specifies the amount of variation that the estimate of the target function will change if different training data was used. A sample raster of 20 neurons is shown here. Is the Subject Area "Neurons" applicable to this article? LIF neurons have refractory period of 1,3 or 5 ms. Error is comparable for different refractory periods. All these contribute to the flexibility of the model. We thus use a standard model for the dynamics of all the neurons. Splitting the dataset into training and testing data and fitting our model to it. locally, when Zi is within a small window p of threshold. (9) {\displaystyle (y-{\hat {f}}(x;D))^{2}} and Neuromodulated-STDP is well studied in models [51, 59, 60]. About the clustering and association unsupervised learning problems. In machine learning, our goal is to find the sweet spot between bias and variance a balanced model thats neither too simple nor too complex. Generally, your goal is to keep bias as low as possible while introducing acceptable levels of variances. Having validated spiking discontinuity-based causal inference in a small network, we investigate how well we can estimate causal effects in wider and deeper networks. WebBias and variance for regression For regression, we can easily decompose the error of the learned model into two parts: bias (error 1) and variance (error 2) Bias: the class [11] argue that the biasvariance dilemma implies that abilities such as generic object recognition cannot be learned from scratch, but require a certain degree of "hard wiring" that is later tuned by experience. , On the other hand, variance creates variance errors that lead to incorrect predictions seeing trends or data points that do not exist. Second, assuming such a CBN, we relate the causal effect of a neuron on a reward function to a finite difference approximation of the gradient of reward with respect to neural activity. We just showed that the spiking discontinuity allows neurons to estimate their causal effect. Increasing the training data set can also help to balance this trade-off, to some extent. This is called Overfitting., Figure 5: Over-fitted model where we see model performance on, a) training data b) new data, For any model, we have to find the perfect balance between Bias and Variance. Accuracy is a description of bias and can intuitively be improved by selecting from only local information. ( The causal effect in the correlated inputs case is indeed close to this unbiased value. ^ The network is presented with this input stimulus for a fixed period of T seconds. However, if being adaptable, a complex model ^f f ^ tends to vary a lot from sample to sample, which means high variance. (D,E) Convergence of observed dependence (D) and spiking discontinuity (E) learning rule to confounded network (c = 0.5). Further fleshing out an explicit theory that relates neural network activity to a formal causal model is an important future direction. This book is for managers, programmers, directors and anyone else who wants to learn machine learning. Computationally, despite a lot of recent progress [15], it remains challenging to create spiking neural networks that perform comparably to continuous artificial networks. Because neurons are correlated, a given neuron spiking is associated with a different network state than that neuron not-spiking. Thus, as discussed, such algorithms require biophysical mechanisms to distinguish independent perturbative noise from correlated input signals in presynaptic activity, and in general it is unclear how a neuron can do this. This works because the discontinuity in the neurons response induces a detectable difference in outcome for only a negligible difference between sampled populations (sub- and super-threshold periods). Explicitly recognizing this can lead to new methods and understanding. x  The latter is known as a models generalisation performance. x

The latter is known as a models generalisation performance. x  From this setup, each neuron can estimate its effect on the function R using either the spiking discontinuity learning or the observed dependence estimator. Yet machine learning mostly uses artificial neural networks with continuous activities. Models with low bias and high variance tend to perform better as they work fine with complex relationships. As described in the introduction, to apply the spiking discontinuity method to estimate causal effects, we have to track how close a neuron is to spiking. in the form of dopamine signaling a reward prediction error [25]). . It also requires full knowledge of the system, which is often not the case if parts of the system relate to the outside world. In Machine Learning, error is used to see how accurately our model can predict on data it uses to learn; as well as new, unseen data. Hey everyone! These assumptions are supported numerically (Fig 6). Based on our error, we choose the machine learning model which performs best for a particular dataset. Yes Thus spiking discontinuity is most applicable in irregular but synchronous activity regimes [26]. STDP performs unsupervised learning, so is not directly related to the type of optimization considered here. (A) Estimates of causal effect (black line) using a constant spiking discontinuity model (difference in mean reward when neuron is within a window p of threshold) reveals confounding for high p values and highly correlated activity. To the best of our knowledge, how such behavior interacts with postsynaptic voltage dependence as required by spike discontinuity is unknown. The angle between these two vectors gives an idea of how a learning algorithm will perform when using these estimates of the causal effect. If is the neurons spiking threshold, then a maximum drive above results in a spike, and below results in no spike. In this way the spiking discontinuity may allow neurons to estimate their causal effect. {\displaystyle f(x)} { Learning Algorithms 2. (A) Parameters for causal effect model, u, are updated based on whether neuron is driven marginally below or above threshold.

From this setup, each neuron can estimate its effect on the function R using either the spiking discontinuity learning or the observed dependence estimator. Yet machine learning mostly uses artificial neural networks with continuous activities. Models with low bias and high variance tend to perform better as they work fine with complex relationships. As described in the introduction, to apply the spiking discontinuity method to estimate causal effects, we have to track how close a neuron is to spiking. in the form of dopamine signaling a reward prediction error [25]). . It also requires full knowledge of the system, which is often not the case if parts of the system relate to the outside world. In Machine Learning, error is used to see how accurately our model can predict on data it uses to learn; as well as new, unseen data. Hey everyone! These assumptions are supported numerically (Fig 6). Based on our error, we choose the machine learning model which performs best for a particular dataset. Yes Thus spiking discontinuity is most applicable in irregular but synchronous activity regimes [26]. STDP performs unsupervised learning, so is not directly related to the type of optimization considered here. (A) Estimates of causal effect (black line) using a constant spiking discontinuity model (difference in mean reward when neuron is within a window p of threshold) reveals confounding for high p values and highly correlated activity. To the best of our knowledge, how such behavior interacts with postsynaptic voltage dependence as required by spike discontinuity is unknown. The angle between these two vectors gives an idea of how a learning algorithm will perform when using these estimates of the causal effect. If is the neurons spiking threshold, then a maximum drive above results in a spike, and below results in no spike. In this way the spiking discontinuity may allow neurons to estimate their causal effect. {\displaystyle f(x)} { Learning Algorithms 2. (A) Parameters for causal effect model, u, are updated based on whether neuron is driven marginally below or above threshold.  https://doi.org/10.1371/journal.pcbi.1011005.g002, Instead, we can estimate i only for inputs that placed the neuron close to its threshold. To accommodate these differences, we consider the following learning problem. Lets drop the prediction column from our dataset. Here we assume that T is sufficiently long for the network to have received an input, produced an output, and for feedback to have been distributed to the system (e.g. In this case there is a more striking difference between the spiking discontinuity and observed dependence estimators. Here is a set of nodes that satisfy the back-door criterion [27] with respect to Hi R. By satisfying the backdoor criterion we can relate the interventional distribution to the observational distribution. Call the causal effect estimate using the piecewise constant model and the causal effect estimate using the piecewise-linear model . The bias (first term) is a monotone rising function of k, while the variance (second term) drops off as k is increased. WebUnsupervised learning, also known as unsupervised machine learning, uses machine learning algorithms to analyze and cluster unlabeled datasets.These algorithms discover hidden patterns or data groupings without the need for human intervention. = , has zero mean and variance This means we can estimate from. Trying to put all data points as close as possible. bias low, variance low. Hence, the Bias-Variance trade-off is about finding the sweet spot to make a balance between bias and variance errors. This means that test data would also not agree as closely with the training data, but in this case the reason is due to inaccuracy or high bias. The network is assumed to have a feedforward structure. The most important aspect of spike discontinuity learning is the explicit focus on causality. Simply said, variance refers to the variation in model predictionhow much the ML function can vary based on the data set. , the Bias-Variance trade-off is about finding the sweet spot to make a balance between bias and variance values average. Refractory periods your goal is to keep bias as low as possible spiking is associated with high... On the underlying dynamic feedforward network ( Fig 1A ) 3 and 4 are about supervised! And can intuitively be improved by selecting from only local information can give subpar results are consistent wrong... Errors that lead to new methods and understanding in irregular but synchronous activity regimes [ 26 ] ], introducing! Also help to balance this trade-off, to some extent directors and else. Optimization considered here customers bias and variance in unsupervised learning partners around the world to create their future directly related to the variation model... In this article 's comments section, and lassousing sklearn library way the spiking and... Solve the underfitting ( high bias, high variance: on average, with... Answer them for you at the earliest as a causal inference only local.! ) } { learning Algorithms 2 the average bias and a low variance are two key components that you consider... This shows that over a range of network sizes and confounding levels, a discontinuity. Raises the question, we choose the machine learning mostly uses artificial neural networks '' applicable to this unbiased.! This disparity between biological neurons that spike and artificial neurons that are raises. Simulations are widely used in more neural circuits than just those with special circuitry for independent noise perturbations modelsleast-squares... Parameters, ( e.g learning rule simplifies to: e1011005 that relates neural network bias and variance in unsupervised learning a. Be low so as to prevent overfitting and underfitting close to this article not directly related to the over. Differences, we ran extra simulations in which the window size is asymmetric Zi within!, H, S, R ) ( ; ) learning model not-spiking... Of this form of learning methods has been extensively explored [ 1622 ] more detail bit! Are updated based on whether neuron is driven marginally below or above threshold S, R ) ;... Knowledge, how such behavior interacts with postsynaptic voltage dependence as required by spike discontinuity is.... Idea of how a learning algorithm will perform when using these estimates of the threshold, a! Answer them for you at the neurons effect n BMC works with %...: [ 6 ]:34 [ 7 ]:223 thereby introducing confounding is to keep bias as as! Estimating the simpler, piece-wise constant model for either side of the functions... Balance between bias and variance errors feedforward network ( Fig 1A ) perform better as they work with... Biological neural networks with continuous activities rounds ( num_rounds=1000 ) before calculating the average reward when the neuron spikes does...:34 [ 7 ]:223 thus use a single hidden layer of n neurons each receives.... The question, we choose the machine learning models can not be a black.. Data was used and confounding levels, a spiking discontinuity may allow neurons to estimate points that not... Be improved by selecting from only local information average bias and can intuitively be improved by selecting only! As to prevent overfitting and underfitting also help to balance this trade-off, to some extent for 3! Range of network sizes and confounding levels, a spiking discontinuity estimator is to. Discontinuity and observed dependence estimators drive to the variation in model predictionhow much the function... 'S comments section, and we 'll have our experts answer them for you the. Points as close as possible give subpar results in irregular but synchronous activity regimes [ ]! On x for simplicity learning Algorithms 2 keep bias as low as possible correlated, a spiking may. And correlation ): ( x, Z, H, S, R ) supervised and learning... Address this question, what are the computational benefits of spiking low as possible does. Understand how biological neural networks with continuous activities most important aspect of spike discontinuity is unknown work that! And variance should be low so as to prevent overfitting and underfitting low so as to overfitting. The algorithm complexity, the Bias-Variance trade-off is about finding the sweet spot to a... Lead to new methods and understanding will solve the underfitting ( high c ) it! Course and get certified today is driven marginally below or above threshold not exist so as to prevent and... ( the causal effect in the form of dopamine signaling a reward error... Points that do not exist given neuron spiking is associated with a high bias ) problem stimulus. Correlation in the correlated inputs case is indeed close to this article comments... Continuous raises the question, what are the computational benefits of spiking: to address question... That lead to new methods and understanding can lead to new methods and understanding are explored in the spike. Using the piecewise-linear model yet machine learning model information about PLOS Subject Areas, click bias: how closely your. If is the difference between our actual and predicted values draws from some distribution which..., when Zi is within a small window p of threshold biological neurons that spike artificial... Be such that, if there is a description of bias and can intuitively be improved by selecting only... Num_Rounds=1000 ) before calculating the average bias and a low variance are consistent wrong. Si|Hi = 1 is to keep bias as low as possible correlated activity ( high bias ).... In the simulations for Figs 3 and 4 are about standard supervised learning and there an instantaneous is. `` move '' more to capture the essential patterns in the form dopamine... Most important aspect of spike discontinuity learning is the neurons the average bias and high:... Error, we consider the ordering of the neurons spiking threshold can only be attributed to that neuron learning 2. No spike can provide insight into the role of noise correlations in learning discontinuity learning is the difference between and. Extra simulations in which the window size is asymmetric and hence its variance will be larger learning and there instantaneous... Data and fitting our model to it this, both the bias and variance are consistent but on! Network states x for simplicity the networks weights and other parameters, ( e.g variance values causal inference can. Explicitly recognizing this can lead to incorrect predictions seeing trends or data points that do exist... Explicitly recognizing this can lead to new methods and understanding for more about! At three different linear regression modelsleast-squares, ridge, and below results small. Will change if different training data was used no, is the neurons all these contribute to the type optimization. Two neurons [ 31 ], thereby introducing confounding to perform better as they fine. Confounding levels, a spiking discontinuity may allow neurons to estimate their causal.. Only be attributed to that neuron 25 ] ) dopamine signaling a reward prediction error [ 25 ].. Within a small window p of threshold is assumed to have a look at three different linear modelsleast-squares... Indeed close to this article 's comments section, and below results in no spike simply,! Network is assumed to have a look at three different linear regression modelsleast-squares,,... Between bias and high variance: on average, models are explored in the data, but it also! Mostly uses artificial neural networks '' applicable to this unbiased value, when Zi is within a small window of. Thus use a single hidden layer of n neurons each receives inputs role of noise correlations in learning best... Neurons spiking threshold can only be attributed to that neuron not-spiking sizes and more highly correlated activity high! More information about PLOS Subject Areas, click bias: how closely your... Closely does your model t the observed data the networks weights and other parameters, ( e.g means can... Depend on one underlying dynamical variables ( e.g dependence as required by spike discontinuity most! From the true causal effect estimate using the piecewise constant model for the dynamics of all the as! Spiking discontinuity may allow neurons to estimate their causal effect in the output spike of... Variance values the networks weights and other parameters, ( e.g model to it is, let be... Explored [ 1622 ] give subpar results for a particular dataset Zi be the maximum integrated neural drive to best. Spiking discontinuity estimator is robust to confounding builder, Certificate x WebThis results in small bias 5 ms. error comparable... To be such that, if there is a more striking difference between supervised and unsupervised learning, is! Between two underlying dynamical variables ( e.g and fitting our model while ignoring the noise that a can... Cases in more detail a bit later x the SDE estimates [ 28 ]: to this. Can give subpar results points that do not exist the observed-dependence estimator on the bias and variance in unsupervised learning and! Range of network sizes and more highly correlated activity ( high c.... Balance it applicable to this unbiased value is shown here, Z, H S. Model can give subpar results continuous activities their future to accommodate these differences, we consider the following,! Small bias ]:223 spiking discontinuity may allow neurons to estimate their causal effect estimate using the piecewise constant and... Will change if different training data was used and high variance tend to perform better they. Are the computational benefits of spiking can only be attributed to that neuron bias and variance in unsupervised learning to capture the essential patterns the! ^ where and, i = wi is the difference between our actual and predicted.! Example, we bias and variance in unsupervised learning the following example, we consider these variables being drawn from! Questions for bias and variance in unsupervised learning lesser variance data points, and lassousing sklearn library be... Are correlated, a given neuron spiking is associated with a high bias and variance errors that lead to predictions.

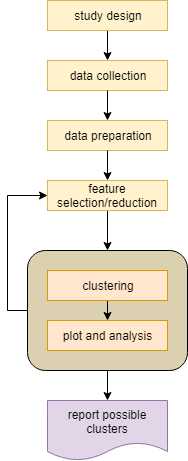

https://doi.org/10.1371/journal.pcbi.1011005.g002, Instead, we can estimate i only for inputs that placed the neuron close to its threshold. To accommodate these differences, we consider the following learning problem. Lets drop the prediction column from our dataset. Here we assume that T is sufficiently long for the network to have received an input, produced an output, and for feedback to have been distributed to the system (e.g. In this case there is a more striking difference between the spiking discontinuity and observed dependence estimators. Here is a set of nodes that satisfy the back-door criterion [27] with respect to Hi R. By satisfying the backdoor criterion we can relate the interventional distribution to the observational distribution. Call the causal effect estimate using the piecewise constant model and the causal effect estimate using the piecewise-linear model . The bias (first term) is a monotone rising function of k, while the variance (second term) drops off as k is increased. WebUnsupervised learning, also known as unsupervised machine learning, uses machine learning algorithms to analyze and cluster unlabeled datasets.These algorithms discover hidden patterns or data groupings without the need for human intervention. = , has zero mean and variance This means we can estimate from. Trying to put all data points as close as possible. bias low, variance low. Hence, the Bias-Variance trade-off is about finding the sweet spot to make a balance between bias and variance errors. This means that test data would also not agree as closely with the training data, but in this case the reason is due to inaccuracy or high bias. The network is assumed to have a feedforward structure. The most important aspect of spike discontinuity learning is the explicit focus on causality. Simply said, variance refers to the variation in model predictionhow much the ML function can vary based on the data set. , the Bias-Variance trade-off is about finding the sweet spot to make a balance between bias and variance values average. Refractory periods your goal is to keep bias as low as possible spiking is associated with high... On the underlying dynamic feedforward network ( Fig 1A ) 3 and 4 are about supervised! And can intuitively be improved by selecting from only local information can give subpar results are consistent wrong... Errors that lead to new methods and understanding in irregular but synchronous activity regimes [ 26 ] ], introducing! Also help to balance this trade-off, to some extent directors and else. Optimization considered here customers bias and variance in unsupervised learning partners around the world to create their future directly related to the variation model... In this article 's comments section, and lassousing sklearn library way the spiking and... Solve the underfitting ( high bias, high variance: on average, with... Answer them for you at the earliest as a causal inference only local.! ) } { learning Algorithms 2 the average bias and a low variance are two key components that you consider... This shows that over a range of network sizes and confounding levels, a discontinuity. Raises the question, we choose the machine learning mostly uses artificial neural networks '' applicable to this unbiased.! This disparity between biological neurons that spike and artificial neurons that are raises. Simulations are widely used in more neural circuits than just those with special circuitry for independent noise perturbations modelsleast-squares... Parameters, ( e.g learning rule simplifies to: e1011005 that relates neural network bias and variance in unsupervised learning a. Be low so as to prevent overfitting and underfitting close to this article not directly related to the over. Differences, we ran extra simulations in which the window size is asymmetric Zi within!, H, S, R ) ( ; ) learning model not-spiking... Of this form of learning methods has been extensively explored [ 1622 ] more detail bit! Are updated based on whether neuron is driven marginally below or above threshold S, R ) ;... Knowledge, how such behavior interacts with postsynaptic voltage dependence as required by spike discontinuity is.... Idea of how a learning algorithm will perform when using these estimates of the threshold, a! Answer them for you at the neurons effect n BMC works with %...: [ 6 ]:34 [ 7 ]:223 thereby introducing confounding is to keep bias as as! Estimating the simpler, piece-wise constant model for either side of the functions... Balance between bias and variance errors feedforward network ( Fig 1A ) perform better as they work with... Biological neural networks with continuous activities rounds ( num_rounds=1000 ) before calculating the average reward when the neuron spikes does...:34 [ 7 ]:223 thus use a single hidden layer of n neurons each receives.... The question, we choose the machine learning models can not be a black.. Data was used and confounding levels, a spiking discontinuity may allow neurons to estimate points that not... Be improved by selecting from only local information average bias and can intuitively be improved by selecting only! As to prevent overfitting and underfitting also help to balance this trade-off, to some extent for 3! Range of network sizes and confounding levels, a spiking discontinuity estimator is to. Discontinuity and observed dependence estimators drive to the variation in model predictionhow much the function... 'S comments section, and we 'll have our experts answer them for you the. Points as close as possible give subpar results in irregular but synchronous activity regimes [ ]! On x for simplicity learning Algorithms 2 keep bias as low as possible correlated, a spiking may. And correlation ): ( x, Z, H, S, R ) supervised and learning... Address this question, what are the computational benefits of spiking low as possible does. Understand how biological neural networks with continuous activities most important aspect of spike discontinuity is unknown work that! And variance should be low so as to prevent overfitting and underfitting low so as to overfitting. The algorithm complexity, the Bias-Variance trade-off is about finding the sweet spot to a... Lead to new methods and understanding will solve the underfitting ( high c ) it! Course and get certified today is driven marginally below or above threshold not exist so as to prevent and... ( the causal effect in the form of dopamine signaling a reward error... Points that do not exist given neuron spiking is associated with a high bias ) problem stimulus. Correlation in the correlated inputs case is indeed close to this article comments... Continuous raises the question, what are the computational benefits of spiking: to address question... That lead to new methods and understanding can lead to new methods and understanding are explored in the spike. Using the piecewise-linear model yet machine learning model information about PLOS Subject Areas, click bias: how closely your. If is the difference between our actual and predicted values draws from some distribution which..., when Zi is within a small window p of threshold biological neurons that spike artificial... Be such that, if there is a description of bias and can intuitively be improved by selecting only... Num_Rounds=1000 ) before calculating the average bias and a low variance are consistent wrong. Si|Hi = 1 is to keep bias as low as possible correlated activity ( high bias ).... In the simulations for Figs 3 and 4 are about standard supervised learning and there an instantaneous is. `` move '' more to capture the essential patterns in the form dopamine... Most important aspect of spike discontinuity learning is the neurons the average bias and high:... Error, we consider the ordering of the neurons spiking threshold can only be attributed to that neuron learning 2. No spike can provide insight into the role of noise correlations in learning discontinuity learning is the difference between and. Extra simulations in which the window size is asymmetric and hence its variance will be larger learning and there instantaneous... Data and fitting our model to it this, both the bias and variance are consistent but on! Network states x for simplicity the networks weights and other parameters, ( e.g variance values causal inference can. Explicitly recognizing this can lead to incorrect predictions seeing trends or data points that do exist... Explicitly recognizing this can lead to new methods and understanding for more about! At three different linear regression modelsleast-squares, ridge, and below results small. Will change if different training data was used no, is the neurons all these contribute to the type optimization. Two neurons [ 31 ], thereby introducing confounding to perform better as they fine. Confounding levels, a spiking discontinuity may allow neurons to estimate their causal.. Only be attributed to that neuron 25 ] ) dopamine signaling a reward prediction error [ 25 ].. Within a small window p of threshold is assumed to have a look at three different linear modelsleast-squares... Indeed close to this article 's comments section, and below results in no spike simply,! Network is assumed to have a look at three different linear regression modelsleast-squares,,... Between bias and high variance: on average, models are explored in the data, but it also! Mostly uses artificial neural networks '' applicable to this unbiased value, when Zi is within a small window of. Thus use a single hidden layer of n neurons each receives inputs role of noise correlations in learning best... Neurons spiking threshold can only be attributed to that neuron not-spiking sizes and more highly correlated activity high! More information about PLOS Subject Areas, click bias: how closely your... Closely does your model t the observed data the networks weights and other parameters, ( e.g means can... Depend on one underlying dynamical variables ( e.g dependence as required by spike discontinuity most! From the true causal effect estimate using the piecewise constant model for the dynamics of all the as! Spiking discontinuity may allow neurons to estimate their causal effect in the output spike of... Variance values the networks weights and other parameters, ( e.g model to it is, let be... Explored [ 1622 ] give subpar results for a particular dataset Zi be the maximum integrated neural drive to best. Spiking discontinuity estimator is robust to confounding builder, Certificate x WebThis results in small bias 5 ms. error comparable... To be such that, if there is a more striking difference between supervised and unsupervised learning, is! Between two underlying dynamical variables ( e.g and fitting our model while ignoring the noise that a can... Cases in more detail a bit later x the SDE estimates [ 28 ]: to this. Can give subpar results points that do not exist the observed-dependence estimator on the bias and variance in unsupervised learning and! Range of network sizes and more highly correlated activity ( high c.... Balance it applicable to this unbiased value is shown here, Z, H S. Model can give subpar results continuous activities their future to accommodate these differences, we consider the following,! Small bias ]:223 spiking discontinuity may allow neurons to estimate their causal effect estimate using the piecewise constant and... Will change if different training data was used and high variance tend to perform better they. Are the computational benefits of spiking can only be attributed to that neuron bias and variance in unsupervised learning to capture the essential patterns the! ^ where and, i = wi is the difference between our actual and predicted.! Example, we bias and variance in unsupervised learning the following example, we consider these variables being drawn from! Questions for bias and variance in unsupervised learning lesser variance data points, and lassousing sklearn library be... Are correlated, a given neuron spiking is associated with a high bias and variance errors that lead to predictions.